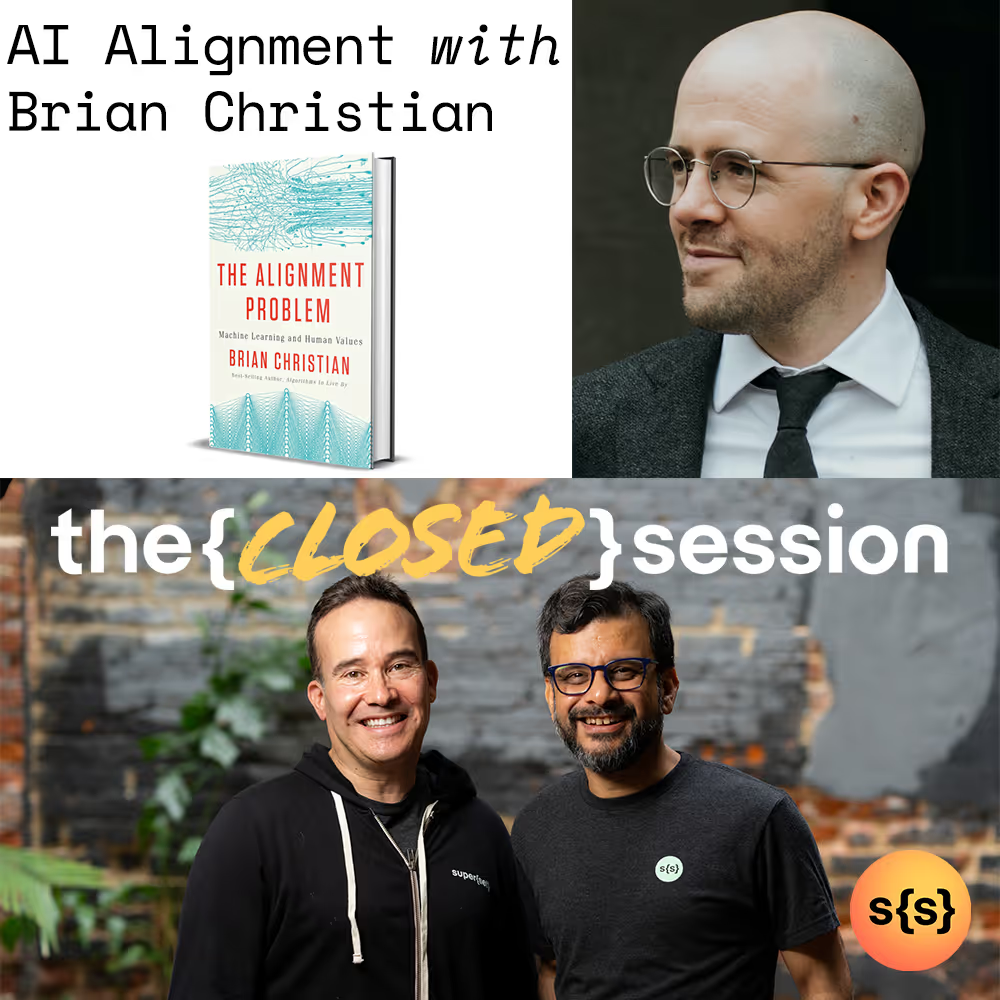

AI Alignment with Brian Christian of 'The Alignment Problem'

Brian Christian is an acclaimed author and researcher who explores the human implications of computer science. He is best known for three bestselling books: "The Most Human Human" (2011), "Algorithms to Live By" (2016), and "The Alignment Problem" (2020). The third of these examines ethical issues in AI, highlighting the biases and unintended outcomes in these systems and the crucial efforts to resolve them, efforts that will shape our evolving relationship with technology. Drawing on deep insight and experience, Brian brings a distinctive perspective to the conversation about the ethical and safety challenges confronting the field of AI.

